Terence Tao

IPAM industrial short course in generative AI algorithms – deadline for applications closing soon

(Sharing this in my capacity as director of special projects at IPAM.)

IPAM is holding an Industrial Short Course on Generative AI Algorithms on March 5-6, 2026.

The short course is aimed at people from industry or government who want to get started in deep learning, apply deep learning to their projects, learn how to code deep learning algorithms, and upgrade their skills to the latest AI algorithms, including generative AI. The course will be taught by Professor Xavier Bresson from the Department of Computer Science at the National University of Singapore (NUS).

The course will be limited to 25 participants. The fee for this course is $2,500 for participants. Registration closes soon (Feb 5); we still have a few spots available.

A crowdsourced repository for optimization constants?

Thomas Bloom’s Erdös problem site has become a real hotbed of activity in recent months, particularly as some of the easiest of the outstanding open problems have turned out to be amenable to various AI-assisted approaches; there is now a lively community in which human contributions, AI contributions, and hybrid contributions are presented, discussed, and in some cases approved as updates to the site.

One of the lessons I draw from this is that once a well curated database of precise mathematical problems is maintained, it becomes possible for other parties to build upon it in many ways (including both AI-based and human-based approaches), to systematically make progress on some fraction of the problems.

This makes me wonder what other mathematical databases could be created to stimulate similar activity. One candidate that came to mind is “optimization constants” – constants that arise from some mathematical optimization problem of interest, for instance finding the best constant for which a certain functional inequality is satisfied.

I am therefore proposing to create a crowdsourced repository for such constants, to record the best upper and lower bounds known for any given such constant, in order to help encourage efforts (whether they be by professional mathematicians, amateur mathematicians, or research groups at a tech company) to try to improve upon the state of the art.

There are of course thousands of such constants one could consider, but just to get the discussion going, I set up a very minimal, proof-of-concept GitHub repository holding over 20 constants including:

- , the constant in a certain autocorrelation quantity relating to Sidon sets. (This constant seems to have a surprisingly nasty optimizer; see this tweet thread of Damek Davis.)

- , the constant in Erdös’ minimum overlap problem.

Here, I am taking inspiration from the Erdös problem web site and arbitrarily assigning a number to each constant, for ease of reference.

Even in this minimal state I think the repository is ready to start accepting more contributions, in the form of pull requests that add new constants, or improve the known bounds on existing constants. (I am particularly interested in constants that have an extensive literature of incremental improvements in the lower and upper bounds, and which look at least somewhat amenable to computational or AI-assisted approaches.) But I would be interested to hear feedback on how to improve the repository in other ways.

Update: Paata Ivanisvili and Damek Davis have kindly agreed to help run and expand this repository.

Rogers’ theorem on sieving

A basic problem in sieve theory is to understand what happens when we start with the integers (or some subinterval of the integers) and remove some congruence classes for various moduli . Here we shall concern ourselves with the simple setting where we are sieving the entire integers rather than an interval, and are only removing a finite number of congruence classes . In this case, the set of integers that remain after the sieving is periodic with period , so one can work without loss of generality in the cyclic group . One can then ask: what is the density of the sieved set

If the were all coprime, then it is easy to see from the Chinese remainder theorem that the density is given by the product

However, when the are not coprime, the situation is more complicated. One can use the inclusion-exclusion formula to get a complicated expression for the density, but it is not easy to work with. Sieve theory also supplies one with various useful upper and lower bounds (starting with the classical Bonferroni inequalities), but these do not give exact formulae.

In this blog post I would like to note one simple fact, due to Rogers, that one can say about this problem:

Theorem 1 (Rogers’ theorem) For fixed , the density of the sieved set is maximized when all the vanish. Thus,

Example 2 If one sieves out , , and , then only remains, giving a density of . On the other hand, if one sieves out , , and , then the remaining elements are and , giving the larger density of .
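For small moduli, the statement of Theorem 1 can be checked by brute force. The following is a minimal Python sketch, not part of Rogers' argument: the function names are my own, and the moduli 2, 3, 6 with shifts 1, 1, 2 are an illustrative choice (the specific residue classes of Example 2 are not reproduced above). It computes the density of the sieved set over one full period and compares every choice of residues against the all-zero choice.

```python
from fractions import Fraction
from itertools import product
from math import lcm


def sieved_density(moduli, residues):
    """Density of integers n with n != a_i (mod q_i) for every i."""
    period = lcm(*moduli)  # the sieved set is periodic with this period
    survivors = sum(
        1
        for n in range(period)
        if all(n % q != a % q for q, a in zip(moduli, residues))
    )
    return Fraction(survivors, period)


def rogers_holds(moduli):
    """Check that no choice of residues beats the all-zero choice."""
    zero_density = sieved_density(moduli, [0] * len(moduli))
    return all(
        sieved_density(moduli, shifts) <= zero_density
        for shifts in product(*(range(q) for q in moduli))
    )


if __name__ == "__main__":
    print(sieved_density([2, 3, 6], [1, 1, 2]))  # shifted classes
    print(sieved_density([2, 3, 6], [0, 0, 0]))  # all residues zero
    print(rogers_holds([2, 3, 6]), rogers_holds([4, 6, 10]))
```

The search is exponential in the number of moduli, so this is only a sanity check for small cases and says nothing about why the theorem holds.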

This theorem is somewhat obscure: its only appearance in print is on pages 242-244 of this 1966 text of Halberstam and Roth, where the authors write in a footnote that the result is “unpublished; communicated to the authors by Professor Rogers”. I have only been able to find it cited in three places in the literature: in this 1996 paper of Lewis, in this 2007 paper of Filaseta, Ford, Konyagin, Pomerance, and Yu (where they credit Tenenbaum for bringing the reference to their attention), and briefly in this 2008 paper of Ford. As far as I can tell, the result is not available online, which could explain why it is rarely cited (and also not known to AI tools). This became relevant recently with regard to Erdös problem 281, posed by Erdös and Graham in 1980, which was solved recently by Neel Somani through an AI query, via an elegant ergodic theory argument. However, shortly after this solution was located, it was discovered by KoishiChan that Rogers’ theorem reduced this problem immediately to a very old result of Davenport and Erdös from 1936. Apparently, Rogers’ theorem was so obscure that even Erdös was unaware of it when posing the problem!

Modern readers may see some similarities between Rogers’ theorem and various rearrangement or monotonicity inequalities, suggesting that the result may be proven by some sort of “symmetrization” or “compression” method. This is indeed the case, and is basically Rogers’ original proof. We can modernize a bit as follows. Firstly, we can abstract into a finite cyclic abelian group , with residue classes now becoming cosets of various subgroups of . We can take complements and restate Rogers’ theorem as follows:

Theorem 3 (Rogers’ theorem, again) Let be cosets of a finite cyclic abelian group . Then

Example 4 Take , , , and . Then the cosets , , and cover the residues , with a cardinality of ; but the subgroups cover the residues , having the smaller cardinality of .

Intuitively: “sliding” the cosets together reduces the total amount of space that these cosets occupy. As pointed out in comments, the requirement of cyclicity is crucial; four lines in a finite affine plane already suffice to be a counterexample otherwise.
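To see the failure of the non-cyclic case concretely, here is a small Python sketch. It assumes the smallest setting I could think of, the affine plane over the field with three elements (the group (Z/3)^2), with one line in each of its four directions; this choice, and all names in the code, are mine and are not taken from the comments referred to above. The four lines through the origin are subgroups and together cover the whole plane, whereas suitable translates (cosets) cover strictly fewer points, contradicting the inequality of Theorem 3.

```python
from itertools import product

P = 3  # work in the plane (Z/3)^2; an illustrative choice
DIRECTIONS = [(1, 0), (0, 1), (1, 1), (1, 2)]  # the four directions of lines


def line(direction, shift):
    """The coset shift + {t * direction : t in Z/P}, as a set of points."""
    dx, dy = direction
    sx, sy = shift
    return {((sx + t * dx) % P, (sy + t * dy) % P) for t in range(P)}


def union_size(shifts):
    """Number of points covered by one line per direction, shifted as given."""
    covered = set()
    for direction, shift in zip(DIRECTIONS, shifts):
        covered |= line(direction, shift)
    return len(covered)


points = list(product(range(P), repeat=2))
subgroup_cover = union_size([(0, 0)] * len(DIRECTIONS))
# Exhaustive search over all ways to translate the four lines.
best_shifts = min(product(points, repeat=len(DIRECTIONS)), key=union_size)

if __name__ == "__main__":
    print("lines through the origin cover", subgroup_cover, "points")
    print("best translates", best_shifts, "cover", union_size(best_shifts))
```

Sliding the lines apart here reduces the covered set, the opposite of what happens in the cyclic case.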

By factoring the cyclic group into -groups, Rogers’ theorem is an immediate consequence of two observations:

Theorem 5 (Rogers’ theorem for cyclic groups of prime power order) Rogers’ theorem holds when for some prime power .

Theorem 6 (Rogers’ theorem preserved under products) If Rogers’ theorem holds for two finite abelian groups of coprime orders, then it also holds for the product .

The case of cyclic groups of prime power order is trivial, because the subgroups of are totally ordered. In this case is simply the largest of the , which has the same size as and thus has lesser or equal cardinality to .

The preservation of Rogers’ theorem under products is also routine to verify. By the coprime orders of and standard group theoretic arguments (e.g., Goursat’s lemma, the Schur–Zassenhaus theorem, or the classification of finite abelian groups), one can see that any subgroup of splits as a direct product of subgroups of respectively, so the cosets also split as

Applying Rogers’ theorem for to each “vertical slice” of and summing, we see that and then applying Rogers’ theorem for to each “horizontal slice” of and summing, we obtain Combining the two inequalities, we obtain the claim.

The integrated explicit analytic number theory network

Like many other areas of modern analysis, analytic number theory often relies on the convenient device of asymptotic notation to express its results. It is common to use notation such as or , for instance, to indicate a bound of the form for some unspecified constant . Such implied constants vary from line to line, and in most papers, one does not bother to compute them explicitly. This makes the papers easier both to write and to read (for instance, one can use asymptotic notation to conceal a large number of lower order terms from view), and also means that minor numerical errors (for instance, forgetting a factor of two in an inequality) typically have no major impact on the final results. However, the price one pays for this is that many results in analytic number theory are only true in an asymptotic sense; a typical example is Vinogradov’s theorem that every sufficiently large odd integer can be expressed as the sum of three primes. In the first few proofs of this theorem, the threshold for “sufficiently large” was not made explicit.

There is however a small part of analytic number theory devoted to explicit estimates, in which all constants are made completely explicit (and many lower order terms are retained). For instance, whereas the prime number theorem asserts that the prime counting function is asymptotic to the logarithmic integral , in this recent paper of Fiori, Kadiri, and Swidinsky the explicit estimate

is proven for all .

Such explicit results follow broadly similar strategies of proof to their non-explicit counterparts, but require a significant amount of careful book-keeping and numerical optimization; furthermore, any given explicit analytic number theory paper is likely to rely on the numerical results obtained in previous explicit analytic number theory papers. While the authors make their best efforts to avoid errors and build in some redundancy into their work, there have unfortunately been a few cases in which an explicit result stated in the published literature contained numerical errors that placed the numerical constants in several downstream applications of these papers into doubt.

Because of this propensity for error, updating any given explicit analytic number theory result to take into account computational improvements in other explicit results (such as zero-free regions for the Riemann zeta function) is not done lightly; such updates occur on the timescale of decades, and only by a small number of specialists in such careful computations. As a result, authors who need such explicit results are often forced to rely on papers that are a decade or more out of date, with constants that they know can in principle be improved by inserting more recent explicit inputs, but which they lack the domain expertise to update confidently.

To me, this situation sounds like an appropriate application of modern AI and formalization tools – not to replace the most enjoyable aspects of human mathematical research, but rather to allow extremely tedious and time-consuming, but still necessary, mathematical tasks to be offloaded to semi-automated or fully automated tools.

Because of this, I (acting in my capacity as Director of Special Projects at IPAM) have just launched the integrated explicit analytic number theory network, a project partially hosted within the existing “Prime Number Theorem And More” (PNT+) formalization project. This project will consist of two components. The first is a crowdsourced formalization project to formalize a number of inter-related explicit analytic number theory results in Lean, such as the explicit prime number theorem of Fiori, Kadiri, and Swidinsky mentioned above; already some smaller results have been largely formalized, and we are making good progress (especially with the aid of modern AI-powered autoformalization tools) on several of the larger papers. The second, which will be run at IPAM with the financial and technical support of Math Inc., will be to extract from this network of formalized results an interactive “spreadsheet” of a large number of types of such estimates, with the ability to add or remove estimates from the network and have the numerical impact of these changes automatically propagate to other estimates in the network, similar to how changing one cell in a spreadsheet will automatically update other cells that depend on it. For instance, one could increase or decrease the numerical threshold to which the Riemann hypothesis is verified, and see the impact of this change on the explicit error terms in the prime number theorem; or one could “roll back” all the literature to a given date, and see which of the best estimates on various analytic number theory expressions could still be derived from the literature available at that date.

Initially, this spreadsheet will be drawn from direct adaptations of the various arguments from papers formalized within the network, but in a more ambitious second stage of the project we plan to use AI tools to modify these arguments to find more efficient relationships between the various numerical parameters than were provided in the source literature.
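The “spreadsheet” behaviour described above can be illustrated with a toy dependency network. This is only a sketch of the general idea, not the design of the actual project: the class `EstimateNetwork`, the cell names, and the formulas (a made-up error term `1/H` and a made-up bound built from it) are all hypothetical and carry no number-theoretic meaning. Inputs are stored as values, derived estimates as rules depending on other cells, and every query recomputes from the current inputs, so changing one input propagates automatically.

```python
from fractions import Fraction


class EstimateNetwork:
    """Toy network of numerical estimates with automatic propagation."""

    def __init__(self):
        self._inputs = {}  # name -> value supplied directly
        self._rules = {}   # name -> (dependency names, function)

    def set_input(self, name, value):
        self._inputs[name] = value

    def define(self, name, deps, fn):
        """Declare that `name` is computed from the cells in `deps` via `fn`."""
        self._rules[name] = (tuple(deps), fn)

    def value(self, name, _cache=None):
        """Evaluate a cell from the current inputs (memoised per query)."""
        cache = {} if _cache is None else _cache
        if name not in cache:
            if name in self._inputs:
                cache[name] = self._inputs[name]
            else:
                deps, fn = self._rules[name]
                cache[name] = fn(*(self.value(d, cache) for d in deps))
        return cache[name]


if __name__ == "__main__":
    net = EstimateNetwork()
    net.set_input("H", 100)  # hypothetical verification height
    net.define("error", ["H"], lambda h: Fraction(1, h))     # made-up formula
    net.define("bound", ["error"], lambda e: 2 * e + 1)      # made-up formula
    print(net.value("bound"))
    net.set_input("H", 200)  # improve the input ...
    print(net.value("bound"))  # ... and the downstream bound updates
```

A “roll back to a given date” feature could be modelled in the same spirit by attaching a date to each input and rule and ignoring those dated later than the cutoff, though that is not implemented here.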

These more ambitious outcomes will likely take several months before a working proof of concept can be demonstrated; but in the near term I will be grateful for any contributions to the formalization side of the project, which is being coordinated on the PNT+ Zulip channel and on the GitHub repository. We are using a GitHub issues based system to coordinate the project, similar to how it was done for the Equational Theories Project. Any volunteer can select one of the outstanding formalization tasks on the GitHub issues page and “claim” it as a task to work on, eventually submitting a pull request (PR) to the repository when proposing a solution (or “disclaim” the task if for whatever reason they are unable to complete it). As with other large formalization projects, an informal “blueprint” is currently under construction that breaks up the proofs of the main results of several explicit analytic number theory papers into bite-sized lemmas and sublemmas, most of which can be formalized independently without requiring broader knowledge of the arguments from the paper that the lemma was taken from. (A graphical display of the current formalization status of this blueprint can be found here. At the current time of writing, many portions of the blueprint are disconnected from each other, but as the formalization progresses, more linkages should be created.)

One minor innovation implemented in this project is to label each task by a “size” (ranging from XS (extra small) to XL (extra large)) that is a subjective assessment of the task difficulty, with the tasks near the XS side of the spectrum particularly suitable for beginners to Lean.

We are permitting AI use in completing the proof formalization tasks, though we require that the AI use be disclosed, and that the code be edited by humans to remove excessive bloat. (We expect some of the AI-generated code to be rather inelegant; but no proof of these explicit analytic number theory estimates, whether human-generated or AI-generated, is likely to be all that pretty or worth reading for its own sake, so the downsides of using AI-generated proofs here are lower than in other use cases.) We of course require all submissions to typecheck correctly in Lean through GitHub’s Continuous Integration (CI) system, so that any incorrect AI-generated code will be rejected. We are also cautiously experimenting with ways in which AI can automatically or semi-automatically generate the formalized statements of lemmas and theorems, though here one has to be significantly more alert to the dangers of misformalizing an informally stated result, as this type of error cannot be automatically detected by a proof assistant.
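To illustrate the kind of misformalization that a proof assistant cannot catch, here is a deliberately silly Lean 4 example. It is hypothetical and not taken from the project; the theorem name is my own. The statement typechecks and the proof is accepted, but only because the hypothesis can never hold for a natural number, so the “result” says nothing at all.

```lean
-- Hypothetical illustration: the hypothesis `x < 0` is impossible for a
-- natural number, so this statement is vacuously true. Lean accepts the
-- proof, but a human must notice that the statement is not the intended one.
theorem vacuous_bound (x : Nat) (h : x < 0) : x = 37 :=
  absurd h (Nat.not_lt_zero x)
```

A more realistic slip would be subtler (for instance a quantifier in the wrong order, or a subtraction of natural numbers that silently truncates at zero), but the point is the same: the CI check certifies the proof, not the faithfulness of the statement.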

We also welcome suggestions for additional papers or results in explicit analytic number theory to add to the network, and will have some blueprinting tasks in addition to the formalization tasks to convert such papers into a blueprinted sequence of small lemmas suitable for individual formalization.

Update: a personal log documenting the project may be found here.

Polynomial towers and inverse Gowers theory for bounded-exponent groups

Asgar Jamneshan, Or Shalom and I have uploaded to the arXiv our paper “Polynomial towers and inverse Gowers theory for bounded-exponent groups”. This continues our investigation into the ergodic-theory approach to the inverse theory of Gowers norms over finite abelian groups . In this regard, our main result establishes a satisfactory (qualitative) inverse theorem for groups of bounded exponent:

Theorem 1 Let be a finite abelian group of some exponent , and let be -bounded with . Then there exists a polynomial of degree at most such that

This type of result was previously known in the case of vector spaces over finite fields (by work of myself and Ziegler), groups of squarefree order (by work of Candela, González-Sánchez, and Szegedy), and in the case (by work of Jamneshan and myself). The case , for instance, is treated by this theorem but not covered by previous results. In the aforementioned paper of Candela et al., a result similar to the above theorem was also established, except that the polynomial was defined in an extension of rather than in itself (or equivalently, correlated with a projection of a phase polynomial, rather than directly with a phase polynomial on ). This result is consistent with a conjecture of Jamneshan and myself regarding what the “right” inverse theorem should be in any finite abelian group (not necessarily of bounded exponent).

In contrast to previous work, we do not need to treat the “high characteristic” and “low characteristic” cases separately; in fact, many of the delicate algebraic questions about polynomials in low characteristic do not need to be directly addressed in our approach, although this is at the cost of making the inductive arguments rather intricate and opaque.

As mentioned above, our approach is ergodic-theoretic, deriving the above combinatorial inverse theorem from an ergodic structure theorem of Host–Kra type. The most natural ergodic structure theorem one could establish here, which would imply the above theorem, would be the statement that if is a countable abelian group of bounded exponent, and is an ergodic -system of order at most in the Host–Kra sense, then would be an Abramov system – generated by polynomials of degree at most . This statement was conjectured many years ago by Bergelson, Ziegler, and myself, and is true in many “high characteristic” cases, but unfortunately fails in low characteristic, as recently shown by Jamneshan, Shalom, and myself. However, we are able to recover a weaker version of this statement here, namely that admits an extension which is an Abramov system. (This result was previously established by Candela et al. in the model case when is a vector space over a finite field.) By itself, this weaker result would only recover a correlation with a projected phase polynomial, as in the work of Candela et al.; but the extension we construct arises as a tower of abelian extensions, and in the bounded exponent case there is an algebraic argument (hinging on a certain short exact sequence of abelian groups splitting) that allows one to map the functions in this tower back to the original combinatorial group rather than an extension thereof, thus recovering the full strength of the above theorem.

It remains to prove the ergodic structure theorem. The standard approach would be to describe the system as a Host–Kra tower

where each extension of is a compact abelian group extension by a cocycle of “type” , and then attempt to show that each such cocycle is cohomologous to a polynomial cocycle. However, this appears to be impossible in general, particularly in low characteristic, as certain key short exact sequences fail to split in the required ways. To get around this, we have to work with a different tower, extending various levels of this tower as needed to obtain additional good algebraic properties of each level that enable one to split the required short exact sequences. The precise properties needed are rather technical, but the main ones can be described informally as follows:

- We need the cocycles to obey an “exactness” property, in that there is a sharp correspondence between the type of the cocycle (or any of its components) and its degree as a polynomial cocycle. (By general nonsense, any polynomial cocycle of degree is automatically of type ; exactness, roughly speaking, asserts the converse.) Informally, the cocycles should be “as polynomial as possible”.

- The systems in the tower need to have “large spectrum” in that the set of eigenvalues of the system forms a countable dense subgroup of the Pontryagin dual of the acting group (in fact we demand that a specific countable dense subgroup is represented).

- The systems need to be “pure” in the sense that the sampling map that maps polynomials on the system to polynomials on the group is injective for a.e. , with the image being a pure subgroup. Informally, this means that the problem of taking roots of a polynomial in the system is equivalent to the problem of taking roots of the corresponding polynomial on the group . In low characteristic, the root-taking problem becomes quite complicated, and we do not give a good solution to this problem either in the ergodic theory setting or the combinatorial one; however, purity at least lets one show that the two problems are (morally) equivalent to each other, which turns out to be what is actually needed to make the arguments work. There is also a technical “relative purity” condition we need to impose at each level of the extension to ensure that this purity property propagates up the tower, but I will not describe it in detail here.

It is then possible to recursively construct a tower of extensions that eventually reaches an extension of , for which the above useful properties of exactness, large spectrum, and purity are obeyed, and such that the system remains Abramov at each level of the tower. This requires a lengthy process of “straightening” the cocycle by differentiating it, obtaining various “Conze–Lesigne” type equations for the derivatives, and then “integrating” those equations to place the original cocycle in a good form. At multiple stages in this process it becomes necessary to have various short exact sequences of (topological) abelian groups split, which necessitates the various good properties mentioned above. To close the induction one then has to verify that these properties can be maintained as one ascends the tower, which is a non-trivial task in itself.

The maximal length of the Erdős–Herzog–Piranian lemniscate in high degree

I’ve just uploaded to the arXiv my preprint The maximal length of the Erdős–Herzog–Piranian lemniscate in high degree. This paper resolves (in the asymptotic regime of sufficiently high degree) an old question about the polynomial lemniscates

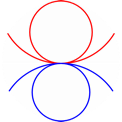

attached to monic polynomials of a given degree , and specifically the question of bounding the arclength of such lemniscates. For instance, when , the lemniscate is the unit circle and the arclength is ; this in fact turns out to be the minimum possible length amongst all (connected) lemniscates, a result of Pommerenke. However, the question of the largest lemniscate length is open. The leading candidate for the extremizer is the polynomial whose lemniscate is quite convoluted, with an arclength that can be computed asymptotically as where is the beta function.

(The images here were generated using AlphaEvolve and Gemini.) A reasonably well-known conjecture of Erdős, Herzog, and Piranian (Erdős problem 114) asserts that this is indeed the maximizer, thus for all monic polynomials of degree .
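As a numerical sanity check on the arclength of the conjectured extremizer, here is a minimal Python sketch. It rests on two assumptions that I am supplying, since the formulas are not displayed above: that the candidate polynomial is p(z) = z^n - 1 (the usual form of the Erdős–Herzog–Piranian conjecture), and that its lemniscate length has the closed form 2^(1/n) B(1/2, 1/(2n)). The function names are illustrative. The code compares this closed form with a direct polyline approximation of the curve, obtained by parametrizing one “petal” as z(t) = (1 + e^{it})^{1/n} and using the n-fold rotational symmetry.

```python
import cmath
import math


def closed_form_length(n):
    """Assumed closed form 2^(1/n) * B(1/2, 1/(2n)) for |z^n - 1| = 1."""
    a, b = 0.5, 1.0 / (2 * n)
    beta = math.gamma(a) * math.gamma(b) / math.gamma(a + b)
    return 2 ** (1.0 / n) * beta


def polyline_length(n, samples=100_000):
    """Approximate the length of {z : |z^n - 1| = 1} by an inscribed polygon.

    The curve is the preimage of the circle w = 1 + e^{it} under z -> z^n.
    The principal n-th root traces one petal for t in [-pi, pi]; the other
    petals are rotations of it, so the total length is n times one petal.
    """
    points = []
    for k in range(samples + 1):
        t = -math.pi + 2 * math.pi * k / samples
        w = 1 + cmath.exp(1j * t)
        points.append(w ** (1.0 / n) if w != 0 else 0j)
    petal = sum(abs(points[k + 1] - points[k]) for k in range(samples))
    return n * petal


if __name__ == "__main__":
    for n in (1, 2, 3, 5):
        print(n, polyline_length(n), closed_form_length(n))
```

For n = 1 the curve is a unit circle, which gives a quick check that both functions return approximately 2π.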

There have been several partial results towards this conjecture. For instance, Eremenko and Hayman verified the conjecture when . Asymptotically, bounds of the form had been known for various such as , , or ; a significant advance was made by Fryntov and Nazarov, who obtained the asymptotically sharp upper bound

and also obtained the sharp conjecture for sufficiently close to . In that paper, the authors comment that the error could be improved, but that seemed to be the limit of their method.

I recently explored this problem with the optimization tool AlphaEvolve, where I found that when I assigned this tool the task of optimizing for a given degree , the tool rapidly converged to choosing to be equal to (up to the rotation and translation symmetries of the problem). This suggested to me that the conjecture was true for all , though of course this was far from a rigorous proof. AlphaEvolve also provided some useful visualization code for these lemniscates which I have incorporated into the paper (and this blog post), and which helped build my intuition for this problem; I view this sort of “vibe-coded visualization” as another practical use-case of present-day AI tools.

In this paper, we iteratively improve upon the Fryntov-Nazarov method to obtain the following bounds, in increasing order of strength:

- (i) .

- (ii) .

- (iii) .

- (iv) for sufficiently large .

The proof of these bounds is somewhat circuitous and technical, with the analysis from each part of this result used as a starting point for the next one. For this blog post, I would like to focus on the main ideas of the arguments.

A key difficulty is that there are relatively few tools for upper bounding the arclength of a curve; indeed, the coastline paradox already shows that curves can have infinite length even when bounded. Thus, one needs to utilize some smooth or algebraic structure on the curve to hope for good upper bounds. One possible approach is via the Crofton formula, using Bezout’s theorem to control the intersection of the curve with various lines. This is already good enough to get bounds of the form (for instance by combining it with other known tools to control the diameter of the lemniscate), but it seems challenging to use this approach to get bounds close to the optimal .

Instead, we follow Fryntov–Nazarov and utilize Stokes’ theorem to convert the arclength into an area integral. A typical identity used in that paper is

where is area measure, is the log-derivative of , is the log-derivative of , and is the region . This and the triangle inequality already let one prove bounds of the form by controlling using the triangle inequality, the Hardy-Littlewood rearrangement inequality, and the Polya capacity inequality.

But this argument does not fully capture the oscillating nature of the phase on one hand, and the oscillating nature of on the other. Fryntov–Nazarov exploited these oscillations with some additional decompositions and integration by parts arguments. By optimizing these arguments, I was able to establish an inequality of the form

where is an enlargement of (that is significantly less oscillatory, as displayed in the figure below), and are certain error terms that can be controlled by a number of standard tools (e.g., the Grönwall area theorem).

One can heuristically justify (1) as follows. Suppose we work in a region where the functions , are roughly constant: , . For simplicity let us normalize to be real, and to be negative real. In order to have a non-trivial lemniscate in this region, should be close to . Because the unit circle is tangent to the line at , the lemniscate condition is then heuristically approximated by the condition that . On the other hand, the hypothesis suggests that for some amplitude , which heuristically integrates to . Writing in polar coordinates as and in Cartesian coordinates as , the condition can then be rearranged after some algebra as

If the right-hand side is much larger than in magnitude, we thus expect the lemniscate to be empty in this region; but if instead the right-hand side is much less than in magnitude, we expect the lemniscate to behave like a periodic sequence of horizontal lines of spacing . This makes the main terms on both sides of (1) approximately agree (per unit area).

A graphic illustration of (1) (provided by Gemini) is shown below, where the dark spots correspond to small values of that act to “repel” (and shorten) the lemniscate. (The bright spots correspond to the critical points of , which in this case consist of six critical points at the origin and one at both of and .)

By choosing parameters appropriately, one can show that and , yielding the first bound . However, one can do better by a more careful inspection of the arguments, and in particular by measuring the defect in the triangle inequality

where ranges over critical points. From some elementary geometry, one can show that the more the critical points are dispersed away from each other (in an sense), the more one can gain over the triangle inequality here; conversely, the dispersion of the critical points (after normalizing so that these critical points have mean zero) can be used to improve the control on the error terms . Optimizing this strategy leads to the second bound

At this point, the only remaining cases that need to be handled are the ones with bounded dispersion: . In this case, one can do some elementary manipulations of the factorization

to obtain some quite precise control on the asymptotics of and ; for instance, we will be able to obtain an approximation of the form with high accuracy, as long as is not too close to the origin or to critical points. This, combined with direct arclength computations, can eventually lead to the third estimate

The last remaining cases to handle are those of small dispersion, . An extremely careful version of the previous analysis can now give an estimate of the shape for an absolute constant , where is a measure of how close is to (it is equal to the dispersion plus an additional term to deal with the constant term ). This establishes the final bound (for large enough), and even shows that the only extremizer is (up to translation and rotation symmetry).

The Equational Theories Project: Advancing Collaborative Mathematical Research at Scale

Matthew Bolan, Joachim Breitner, Jose Brox, Nicholas Carlini, Mario Carneiro, Floris van Doorn, Martin Dvorak, Andrés Goens, Aaron Hill, Harald Husum, Hernán Ibarra Mejia, Zoltan Kocsis, Bruno Le Floch, Amir Livne Bar-on, Lorenzo Luccioli, Douglas McNeil, Alex Meiburg, Pietro Monticone, Pace P. Nielsen, Emmanuel Osalotioman Osazuwa, Giovanni Paolini, Marco Petracci, Bernhard Reinke, David Renshaw, Marcus Rossel, Cody Roux, Jérémy Scanvic, Shreyas Srinivas, Anand Rao Tadipatri, Vlad Tsyrklevich, Fernando Vaquerizo-Villar, Daniel Weber, Fan Zheng, and I have just uploaded to the arXiv our preprint The Equational Theories Project: Advancing Collaborative Mathematical Research at Scale. This is the final report for the Equational Theories Project, which was proposed in this blog post and also showcased in this subsequent blog post. The aim of this project was to see whether one could collaboratively achieve a large-scale systematic exploration of a mathematical space, which in this case was the implication graph between 4694 equational laws of magmas. A magma is a set equipped with a binary operation (or, equivalently, a multiplication table). An equational law is an equation involving this operation and a number of indeterminate variables. Some examples of equational laws, together with the number that we assigned to that law, include

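- E1 (Trivial law): $x = x$

- E2 (Singleton law): $x = y$

- E43 (Commutative law): $x \diamond y = y \diamond x$

- E168 (Central groupoid law): $x = (y \diamond x) \diamond (x \diamond z)$

- E4512 (Associative law): $x \diamond (y \diamond z) = (x \diamond y) \diamond z$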

The aim of the project was to work out which of these laws imply which others. For instance, all laws imply the trivial law, and conversely the singleton law implies all the others. On the other hand, the commutative law does not imply the associative law (because there exist magmas that are commutative but not associative), nor is the converse true. All in all, there are $4694 \times 4693 = 22{,}028{,}942$ implications of this type to settle; most of these are relatively easy and could be resolved in a matter of minutes by an expert in college-level algebra, but prior to this project, it was impractical to actually do so in a manner that could be feasibly verified. Also, this problem is known to become undecidable for sufficiently long equational laws. Nevertheless, we were able to resolve all the implications informally after two months, and have them completely formalized in Lean after a further five months.
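The commutative-versus-associative example can be checked mechanically. Here is a minimal sketch of a brute-force search over small finite magmas for a counterexample to an implication; the helper names (`all_magmas`, `satisfies`, `find_counterexample`) are illustrative only and are not taken from the project's codebase.

```python
from itertools import product

def all_magmas(n):
    """Yield every binary operation on {0, ..., n-1} as a table op[x][y]."""
    for flat in product(range(n), repeat=n * n):
        yield [list(flat[i * n:(i + 1) * n]) for i in range(n)]

def satisfies(op, n, law, nvars):
    """True if both sides of the law agree for every assignment of variables."""
    return all(lhs == rhs
               for vals in product(range(n), repeat=nvars)
               for lhs, rhs in [law(op, *vals)])

def commutative(op, x, y):
    return op[x][y], op[y][x]

def associative(op, x, y, z):
    return op[x][op[y][z]], op[op[x][y]][z]

def find_counterexample(premise, p_vars, conclusion, c_vars, max_size=3):
    """Search magmas of size <= max_size obeying premise but not conclusion."""
    for n in range(1, max_size + 1):
        for op in all_magmas(n):
            if satisfies(op, n, premise, p_vars) and not satisfies(op, n, conclusion, c_vars):
                return op
    return None

if __name__ == "__main__":
    print(find_counterexample(commutative, 2, associative, 3))
    print(find_counterexample(associative, 3, commutative, 2))
```

Both searches already succeed among the two-element magmas, which is why refutations of this kind are cheap; the number of tables grows like $n^{n^2}$, so this approach stops being practical very quickly as the size increases.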

After a rather hectic setup process (documented in this personal log), progress came in various waves. Initially, huge swathes of implications could be resolved first by very simple-minded techniques, such as brute-force searching all small finite magmas to refute implications; then, automated theorem provers such as Vampire or Mace4 / Prover9 were deployed to handle a large fraction of the remainder. A few equations had existing literature that allowed for many implications involving them to be determined. This left a core of just under a thousand implications that did not fall to any of the “cheap” methods, and which occupied the bulk of the efforts of the project. As it turns out, all of the remaining implications were negative; the difficulty was to construct explicit magmas that obeyed one law but not another. To do this, we discovered a number of general constructions of magmas that were effective at this task. For instance:

- Linear models, in which the carrier was a (commutative or noncommutative) ring and the magma operation took the form $x \diamond y = ax + by$ for some coefficients $a, b$, turned out to resolve many cases.

- We discovered a new invariant of an equational law, which we call the “twisting semigroup” of that law, which also allowed us to construct further examples of magmas that obeyed one law $E$ but not another $E'$, by starting with a base magma $M$ that obeyed both laws, taking a Cartesian power $M^n$ of that magma, and then “twisting” the magma operation by certain permutations of $\{1,\dots,n\}$ designed to preserve $E$ but not $E'$.

- We developed a theory of “abelian magma extensions”, similar to the notion of an abelian extension of a group, which allowed us to flexibly build new magmas out of old ones in a manner controlled by a certain “magma cohomology group”, which was tractable to compute, and again gave ways to construct magmas that obeyed one law $E$ but not another $E'$.

- Greedy methods, in which one fills out an infinite multiplication table in a greedy manner (somewhat akin to naively solving a Sudoku puzzle), subject to some rules designed to avoid collisions and maintain a law $E$, as well as some seed entries designed to enforce a counterexample to a separate law $E'$. Despite the apparent complexity of this method, it can be automated in a manner that allowed for many outstanding implications to be refuted.

- Smarter ways to utilize automated theorem provers, such as strategically adding in additional axioms to the magma to help narrow the search space, were developed over the course of the project.
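To illustrate the first of these constructions, here is a minimal sketch of a search over linear models. It assumes the operation has the form $x \diamond y = ax + by$ and, for simplicity, only uses the commutative rings $\mathbb{Z}/p\mathbb{Z}$ for a few small primes $p$; the function names and the choice of moduli are hypothetical and are not the project's actual tooling.

```python
from itertools import product

def linear_op(a, b, p):
    """The operation x <> y = a*x + b*y on the ring Z/pZ."""
    return lambda x, y: (a * x + b * y) % p

def holds(op, p, law, nvars):
    """True if the law holds for every assignment of variables in Z/pZ."""
    return all(lhs == rhs
               for vals in product(range(p), repeat=nvars)
               for lhs, rhs in [law(op, *vals)])

def commutative(op, x, y):
    return op(x, y), op(y, x)

def associative(op, x, y, z):
    return op(x, op(y, z)), op(op(x, y), z)

def linear_counterexample(premise, p_vars, conclusion, c_vars, moduli=(2, 3, 5, 7)):
    """Return (p, a, b) for a linear model obeying premise but not conclusion."""
    for p in moduli:
        for a, b in product(range(p), repeat=2):
            op = linear_op(a, b, p)
            if holds(op, p, premise, p_vars) and not holds(op, p, conclusion, c_vars):
                return p, a, b
    return None

if __name__ == "__main__":
    print(linear_counterexample(commutative, 2, associative, 3))
```

With these definitions, the search for a commutative but non-associative model returns the operation $2x + 2y$ on $\mathbb{Z}/3\mathbb{Z}$. The appeal of linear models is that checking a law reduces to a handful of polynomial identities in the coefficients, so the search space is tiny compared with enumerating all multiplication tables.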

Even after applying all these general techniques, though, there were about a dozen particularly difficult implications that resisted even these more powerful methods. Several ad hoc constructions were needed in order to understand the behavior of magmas obeying such equations as E854, E906, E1323, E1516, and E1729, with the last of these taking months of effort to finally solve and then formalize.

A variety of graphical interfaces were also developed to facilitate the collaboration (most notably the Equation Explorer tool mentioned above), and several side projects were also created within the project, such as the exploration of the implication graph when the magma was also restricted to be finite. In this case, we resolved all of the implications except for one (and its dual):

Problem 1 Does the law (E677) imply the law (E255) for finite magmas?

See this blueprint page for some partial results on this problem, which we were unable to resolve even after months of effort.

Interestingly, modern AI tools did not play a major role in this project (but it was largely completed in 2024, before the most recent advanced models became available); while they could resolve many implications, the older “good old-fashioned AI” of automated theorem provers was far cheaper to run and already handled the overwhelming majority of the implications that the advanced AI tools could. But I could imagine that such tools would play a more prominent role in future similar projects.

The story of Erdős problem #1026

Problem 1026 on the Erdős problem web site recently got solved through an interesting combination of existing literature, online collaboration, and AI tools. The purpose of this blog post is to try to tell the story of this collaboration, and also to supply a complete proof.

The original problem of Erdős, posed in 1975, is rather ambiguous. Erdős starts by recalling his famous theorem with Szekeres that says that given a sequence of $k^2+1$ distinct real numbers, one can find a subsequence of length $k+1$ which is either increasing or decreasing; and that one cannot improve the $k^2+1$ to $k^2$, by considering for instance a sequence of $k$ blocks of length $k$, with the numbers in each block decreasing, but the blocks themselves increasing. He also noted a result of Hanani that every sequence of length $k(k+1)/2$ can be decomposed into the union of $k$ monotone sequences. He then wrote “As far as I know the following question is not yet settled. Let be a sequence of distinct numbers, determine

where the maximum is to be taken over all monotonic sequences”.

This problem was added to the Erdős problem site on September 12, 2025, with a note that the problem was rather ambiguous. For any fixed $n$, this is an explicit piecewise linear function of the variables $x_1,\dots,x_n$ that could be computed by a simple brute force algorithm, but Erdős was presumably seeking optimal bounds for this quantity under some natural constraint on the $x_i$. The day the problem was posted, Desmond Weisenberg proposed studying the quantity $c(n)$, defined as the largest constant such that

$$ S(x_1,\dots,x_n) \geq c(n) \sum_{i=1}^n x_i $$

for all choices of (distinct) real numbers $x_1,\dots,x_n$, where $S(x_1,\dots,x_n)$ denotes the maximum in Erdős's question. Desmond noted that for this formulation one could assume without loss of generality that the $x_i$ were positive, since deleting negative or vanishing $x_i$ does not increase the left-hand side and does not decrease the right-hand side. By a limiting argument one could also allow collisions between the $x_i$, so long as one interpreted monotonicity in the weak sense.

Though not stated on the web site, one can formulate this problem in game theoretic terms. Suppose that Alice has a stack of $N$ coins for some large $N$. She divides the coins into $n$ piles consisting of $x_1,\dots,x_n$ coins each, so that $x_1+\dots+x_n = N$. She then passes the piles to Bob, who is allowed to select a monotone subsequence of the piles (in the weak sense) and keep all the coins in those piles. What is the largest fraction $c(n)$ of the coins that Bob can guarantee to keep, regardless of how Alice divides up the coins? (One can work with either a discrete version of this problem where the $x_i$ are integers, or a continuous one where the coins can be split fractionally, but in the limit the problems can easily be seen to be equivalent.)

AI-generated images continue to be problematic for a number of reasons, but here is one such image that somewhat manages at least to convey the idea of the game:

For small $n$, one can work out $c(n)$ by hand. For $n=1$, clearly $c(1)=1$: Alice has to put all the coins into one pile, which Bob simply takes. Similarly $c(2)=1$: regardless of how Alice divides the coins into two piles, the piles will either be increasing or decreasing, so in either case Bob can take both. The first interesting case is $n=3$. Bob can again always take the two largest piles, guaranteeing himself $2/3$ of the coins. On the other hand, if Alice almost divides the coins evenly, for instance into piles $((1/3+\varepsilon)N, (1/3-2\varepsilon)N, (1/3+\varepsilon)N)$ for some small $\varepsilon > 0$, then Bob cannot take all three piles as they are non-monotone, and so can only take two of them, allowing Alice to limit the payout fraction to be arbitrarily close to $2/3$. So we conclude that $c(3)=2/3$.
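These small cases are easy to confirm by machine. Below is a minimal sketch of the naive brute-force computation of Bob's best payout for a given division of the coins, enumerating every subsequence of piles and keeping the best weakly monotone one; the function names are my own, and the run time is exponential in the number of piles.

```python
from itertools import combinations

def is_monotone(seq):
    """Weak monotonicity: non-decreasing or non-increasing."""
    nondecreasing = all(a <= b for a, b in zip(seq, seq[1:]))
    nonincreasing = all(a >= b for a, b in zip(seq, seq[1:]))
    return nondecreasing or nonincreasing

def best_monotone_sum(piles):
    """Largest total over all weakly monotone subsequences of the piles."""
    best = 0
    n = len(piles)
    for r in range(1, n + 1):
        for idx in combinations(range(n), r):
            chosen = [piles[i] for i in idx]
            if is_monotone(chosen):
                best = max(best, sum(chosen))
    return best

if __name__ == "__main__":
    # Alice splits 100 coins almost evenly, but non-monotonically.
    piles = [34, 32, 34]
    print(best_monotone_sum(piles) / sum(piles))
```

For the division (34, 32, 34) of 100 coins, Bob's best choice is the two piles of 34, for a payout fraction of 0.68, just above the limiting value of 2/3.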

An hour after Desmond’s comment, Stijn Cambie noted (though not in the language I used above) that a similar construction to the one above, in which Alice divides the coins into $k^2$ piles that are almost even, in such a way that the longest monotone sequence is of length $k$, gives the upper bound $c(k^2) \leq 1/k$. It is also easy to see that $c(n)$ is a non-increasing function of $n$, so this gives a general bound $c(n) \leq (1+o(1))/\sqrt{n}$. Less than an hour after that, Wouter van Doorn noted that the Hanani result mentioned above gives the lower bound $c(n) \geq (1/\sqrt{2}-o(1))/\sqrt{n}$, and posed the problem of determining the asymptotic limit of $\sqrt{n} c(n)$ as $n \to \infty$, given that this was now known to range between $1/\sqrt{2}$ and $1$. This version was accepted by Thomas Bloom, the moderator of the Erdős problem site, as a valid interpretation of the original problem.

The next day, Stijn computed the first few values of exactly:

While the general pattern was not yet clear, this was enough data for Stijn to conjecture that $c(k^2) = 1/k$, which would also imply that $\sqrt{n} c(n) \to 1$ as $n \to \infty$. (EDIT: as later located by an AI deep research tool, this conjecture was also made in Section 12 of this 1980 article of Steele.) Stijn also described the extremizing sequences for this range of $n$, but did not continue the calculation further (a naive computation would take runtime exponential in $n$, due to the large number of possible subsequences to consider).

The problem then lay dormant for almost two months, until December 7, 2025, when Boris Alexeev, as part of a systematic sweep of the Erdős problems using the AI tool Aristotle, was able to get this tool to autonomously solve this conjecture in the proof assistant language Lean. The proof converted the problem to a rectangle-packing problem.

This was one further addition to a recent sequence of examples where an Erdős problem had been automatically solved in one fashion or another by an AI tool. Like the previous cases, the proof turned out to not be particularly novel. Within an hour, Koishi Chan gave an alternate proof deriving the required bound from the original Erdős-Szekeres theorem by a standard “blow-up” argument which we can give here in the Alice-Bob formulation. Take a large $M$, and replace each pile of $x_i$ coins with $(1+o(1)) M^2 x_i^2$ new piles, each of size $(1+o(1)) x_i$, chosen so that the longest monotone subsequence in this collection is $(1+o(1)) M x_i$. Among all the new piles, the longest monotone subsequence has length $(1+o(1)) M S$, where $S = S(x_1,\dots,x_n)$ is the largest sum of a monotone subsequence of the original piles. Applying Erdős-Szekeres, one concludes the bound

$$ M^2 \sum_{i=1}^n x_i^2 \leq (1+o(1)) (M S)^2 $$

and on canceling the $M$'s, sending $M \to \infty$, and applying Cauchy-Schwarz, one obtains $c(k^2) \geq 1/k$ (in fact the argument gives $c(n) \geq 1/\sqrt{n}$ for all $n$).

Once this proof was found, it was natural to try to see if it had already appeared in the literature. AI deep research tools have successfully located such prior literature in the past, but in this case they did not succeed, and a more “old-fashioned” Google Scholar job turned up some relevant references: a 2016 paper by Tidor, Wang and Yang contained this precise result, citing an earlier paper of Wagner as inspiration for applying “blowup” to the Erdős-Szekeres theorem.

But the story does not end there! Upon reading the above story the next day, I realized that the problem of estimating was a suitable task for AlphaEvolve, which I have used recently as mentioned in this previous post. Specifically, one could task it to obtain upper bounds on by directing it to produce real numbers (or integers) summing up to a fixed sum (I chose ) with as small a value of as possible. After an hour of run time, AlphaEvolve produced the following upper bounds on for , with some intriguingly structured potential extremizing solutions:

The numerical scores (divided by ) were pretty obviously trying to approximate simple rational numbers. There were a variety of ways (including modern AI) to extract the actual rational numbers they were close to, but I searched for a dedicated tool and found this useful little web page of John Cook that did the job:

I could not immediately see the pattern here, but after some trial and error in which I tried to align numerators and denominators, I eventually organized this sequence into a more suggestive form:

This gave a somewhat complicated but predictable conjecture for the values of the sequence $c(n)$. On posting this, Boris found a clean formulation of the conjecture, namely that

$$ c(k^2+2a+1) = \frac{k}{k^2+a} \ \ \ \ \ (1)$$

whenever $k \geq 1$ and $-k \leq a \leq k$. After a bit of effort, he also produced an explicit upper bound construction:

Proposition 1 If $k \geq 1$ and $-k \leq a \leq k$, then $c(k^2+2a+1) \leq \frac{k}{k^2+a}$.

Proof: Consider a sequence of numbers clustered around the “red number” and “blue number” , consisting of blocks of “blue” numbers, followed by blocks of “red” numbers, and then further blocks of “blue” numbers. When , one should take all blocks to be slightly decreasing within each block, but the blue blocks should be increasing between each other, and the red blocks should also be increasing between each other. When , all of these orderings should be reversed. The total number of elements is indeed

and the total sum is close to With this setup, one can check that any monotone sequence consists either of at most red elements and at most blue elements, or no red elements and at most blue elements, in either case giving a monotone sum that is bounded by either or giving the claim.

Here is a figure illustrating the above construction in the case (obtained after starting with a ChatGPT-provided file and then manually fixing a number of placement issues):

Here is a plot of (produced by ChatGPT Pro), showing that it is basically a piecewise linear approximation to the square root function:

Shortly afterwards, Lawrence Wu clarified the connection between this problem and a square packing problem, which was also due to Erdős (Problem 106). Let $f(n)$ be the least number such that, whenever one packs $n$ squares of sidelength $d_1,\dots,d_n$ into a square of sidelength $D$, with all sides parallel to the coordinate axes, one has

$$ \sum_{i=1}^n d_i \leq f(n) D. $$

Proposition 2 For any $n$, one has

$$ c(n) \geq \frac{1}{f(n)}. $$

Proof: Given $x_1,\dots,x_n$ and $1 \leq i \leq n$, let $S_i$ be the maximal sum over all increasing subsequences ending in $x_i$, and $T_i$ be the maximal sum over all decreasing subsequences ending in $x_i$. For $i < j$, we have either $S_j \geq S_i + x_j$ (if $x_j \geq x_i$) or $T_j \geq T_i + x_j$ (if $x_j \leq x_i$). In particular, the squares $[S_i - x_i, S_i] \times [T_i - x_i, T_i]$ and $[S_j - x_j, S_j] \times [T_j - x_j, T_j]$ are disjoint. These squares pack into the square $[0, S(x_1,\dots,x_n)]^2$, where $S(x_1,\dots,x_n)$ is the maximal sum of a monotone subsequence, so by definition of $f$, we have

$$ \sum_{i=1}^n x_i \leq f(n) S(x_1,\dots,x_n), $$

and the claim follows.

This idea of using packing to prove Erdős-Szekeres type results goes back to a 1959 paper of Seidenberg, although it was a discrete rectangle-packing argument that was not phrased in such an elegantly geometric form. It is possible that Aristotle was “aware” of the Seidenberg argument via its training data, as it had incorporated a version of this argument in its proof.
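The packing in the proof of Proposition 2 is simple enough to compute directly. The sketch below assumes the notation $S_i$, $T_i$ for the best weakly increasing and weakly decreasing sums ending at the $i$-th pile (these symbols, and all function names, are my reconstruction rather than anything from the original discussion), builds the square with upper right corner $(S_i, T_i)$ and side $x_i$ for each pile, and checks that the squares have disjoint interiors and fit inside a square whose side is the best monotone sum. The inputs are assumed to be positive.

```python
from itertools import combinations

def packing_squares(xs):
    """Return ([(left, bottom, side), ...], side of the containing square)."""
    n = len(xs)
    inc = list(xs)  # inc[j]: best weakly increasing sum ending at xs[j]
    dec = list(xs)  # dec[j]: best weakly decreasing sum ending at xs[j]
    for j in range(n):
        for i in range(j):
            if xs[i] <= xs[j]:
                inc[j] = max(inc[j], inc[i] + xs[j])
            if xs[i] >= xs[j]:
                dec[j] = max(dec[j], dec[i] + xs[j])
    big_side = max(inc + dec)  # best monotone sum overall
    squares = [(inc[i] - xs[i], dec[i] - xs[i], xs[i]) for i in range(n)]
    return squares, big_side

def interiors_disjoint(a, b):
    """Axis-parallel squares (left, bottom, side) with disjoint interiors."""
    ax, ay, asz = a
    bx, by, bsz = b
    return ax + asz <= bx or bx + bsz <= ax or ay + asz <= by or by + bsz <= ay

def is_valid_packing(xs):
    squares, big_side = packing_squares(xs)
    inside = all(x >= 0 and y >= 0 and x + s <= big_side and y + s <= big_side
                 for x, y, s in squares)
    disjoint = all(interiors_disjoint(a, b) for a, b in combinations(squares, 2))
    return inside and disjoint

if __name__ == "__main__":
    print(packing_squares([34, 32, 34]))
    print(is_valid_packing([3, 1, 4, 1, 5, 9, 2, 6]))
```

For the piles (34, 32, 34), this produces three squares of sides 34, 32, 34 packed into a square of side 68, so the sum of the sides (100) is compared against 68 times the packing constant, exactly as in the final inequality of the proof.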

Here is an illustration of the above argument using the AlphaEvolve-provided example

for to convert it to a square packing (image produced by ChatGPT Pro):

At this point, Lawrence performed another AI deep research search, this time successfully locating a paper from just last year by Baek, Koizumi, and Ueoka, where they show that

Theorem 3 For any , one has

which, when combined with a previous argument of Praton, implies

Theorem 4 For any and with , one has

This proves the conjecture!
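As a sanity check on the resulting closed form, here is a short sketch that evaluates it exactly. It assumes the formula reads $c(k^2+2a+1) = k/(k^2+a)$ for $k \geq 1$ and $-k \leq a \leq k$; this is my reading of the conjecture, and it should be treated as an assumption, as should the function name `conjectured_c`.

```python
from fractions import Fraction
from math import isqrt

def conjectured_c(n):
    """Evaluate k/(k^2+a) where n = k^2 + 2a + 1, k >= 1, -k <= a <= k.

    ASSUMPTION: this is the closed form for c(n); when n is a perfect
    square two pairs (k, a) qualify, and they give the same value.
    """
    for k in range(1, isqrt(n) + 2):
        twice_a = n - k * k - 1
        if twice_a % 2 == 0 and -k <= twice_a // 2 <= k:
            a = twice_a // 2
            return Fraction(k, k * k + a)
    raise ValueError("no admissible (k, a) found")

if __name__ == "__main__":
    for n in range(1, 17):
        value = conjectured_c(n)
        # 1/c(n) should track sqrt(n), agreeing exactly at perfect squares.
        print(n, value, float(1 / value), n ** 0.5)
```

The printed table reproduces the hand-computed values for $n = 1, 2, 3$ and makes the piecewise linear approximation to the square root visible: the reciprocal equals $k + a/k$, which is linear in $n$ on each range of fixed $k$.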

There just remained the issue of putting everything together. I did feed all of the above information into a large language model, which was able to produce a coherent proof of (1) assuming the results of Baek-Koizumi-Ueoka and Praton. Of course, LLM outputs are prone to hallucination, so it would be preferable to formalize that argument in Lean, but this looks quite doable with current tools, and I expect this to be accomplished shortly. But I was also able to reproduce the arguments of Baek-Koizumi-Ueoka and Praton, which I include below for completeness.

Proof: (Proof of Theorem 3, adapted from Baek-Koizumi-Ueoka) We can normalize . It then suffices to show that if we pack the length torus by axis-parallel squares of sidelength , then

Pick . Then we have a grid

inside the torus. The square, when restricted to this grid, becomes a discrete rectangle for some finite sets with By the packing condition, we have From (2) we have hence Inserting this bound and rearranging, we conclude that Taking the supremum over we conclude that so by the pigeonhole principle one of the summands is at most . Let’s say it is the former, thus In particular, the average value of is at most . But this can be computed to be , giving the claim. Similarly if it is the other sum.

UPDATE: Actually, the above argument also proves Theorem 4 with only minor modifications. Nevertheless, we give the original derivation of Theorem 4 using the embedding argument of Praton below for sake of completeness.

Proof: (Proof of Theorem 4, adapted from Praton) We write with . We can rescale so that the square one is packing into is . Thus, we pack squares of sidelength into , and our task is to show that

We pick a large natural number (in particular, larger than ), and consider the three nested squares We can pack by unit squares. We can similarly pack into squares of sidelength . All in all, this produces squares, of total length Applying Theorem 3, we conclude that The right-hand side is and the left-hand side similarly evaluates to and so we simplify to Sending , we obtain the claim.

One striking feature of this story for me is how important it was to have a diverse set of people, literature, and tools to attack this problem. To be able to state and prove the precise formula for required multiple observations, including some version of the following:

- The sequence can be numerically computed as a sequence of rational numbers.

- When appropriately normalized and arranged, visible patterns in this sequence appear that allow one to conjecture the form of the sequence.

- This problem is a weighted version of the Erdős-Szekeres theorem.

- Among the many proofs of the Erdős-Szekeres theorem is the proof of Seidenberg in 1959, which can be interpreted as a discrete rectangle packing argument.

- This problem can be reinterpreted as a continuous square packing problem, and in fact is closely related to (a generalized axis-parallel form of) Erdős problem 106, which concerns such packings.

- The axis-parallel form of Erdős problem 106 was recently solved by Baek-Koizumi-Ueoka.

- The paper of Praton shows that Erdős Problem 106 implies the generalized version needed for this problem. This implication specializes to the axis-parallel case.

Another key ingredient was the balanced AI policy on the Erdős problem website, which encourages disclosed AI usage while strongly discouraging undisclosed use. To quote from that policy: “Comments prepared with the assistance of AI are permitted, provided (a) this is disclosed, (b) the contents (including mathematics, code, numerical data, and the existence of relevant sources) have been carefully checked and verified by the user themselves without the assistance of AI, and (c) the comment is not unreasonably long.”

Quantitative correlations and some problems on prime factors of consecutive integers

Joni Teravainen and I have uploaded to the arXiv our paper “Quantitative correlations and some problems on prime factors of consecutive integers“. This paper applies modern analytic number theory tools – most notably, the Maynard sieve and the recent correlation estimates for bounded multiplicative functions of Pilatte – to resolve (either partially or fully) some old problems of Erdős, Straus, Pomerance, Sárközy, and Hildebrand, mostly regarding the prime counting function

$$ \omega(n) := \sum_{p \mid n} 1 $$

and its relatives. The famous Hardy–Ramanujan and Erdős–Kac laws tell us that asymptotically for , should behave like a gaussian random variable with mean and variance both close to ; but the question of the joint distribution of consecutive values such as is still only partially understood. Aside from some lower order correlations at small primes (arising from such observations as the fact that precisely one of will be divisible by ), the expectation is that such consecutive values behave like independent random variables. As an indication of the state of the art, it was recently shown by Charamaras and Richter that any bounded observables , will be asymptotically decorrelated in the limit if one performs a logarithmic statistical averaging. Roughly speaking, this confirms the independence heuristic at the scale of the standard deviation, but does not resolve finer-grained information, such as precisely estimating the probability of the event .

Our first result, answering a question of Erdős, shows that there are infinitely many for which one has the bound

for all . For , such a bound is already to be expected (though not completely universal) from the Hardy–Ramanujan law; the main difficulty is thus with the short shifts . If one only had to demonstrate this type of bound for a bounded number of , then this type of result is well within standard sieve theory methods, which can make any bounded number of shifts “almost prime” in the sense that becomes bounded. Thus the problem is that the “sieve dimension” grows (slowly) with . When writing about this problem in 1980, Erdős and Graham write “we just know too little about sieves to be able to handle such a question (“we” here means not just us but the collective wisdom (?) of our poor struggling human race)”.

However, with the advent of the Maynard sieve (also sometimes referred to as the Maynard–Tao sieve), it turns out to be possible to sieve for the conditions for all simultaneously (roughly speaking, by sieving out any for which is divisible by a prime for a large ), and then perform a moment calculation analogous to the standard proof (due to Turán) of the Hardy–Ramanujan law, but weighted by the Maynard sieve. (In order to get good enough convergence, one needs to control fourth moments as well as second moments, but these are standard, if somewhat tedious, calculations.)
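For readers who want to see the unweighted Hardy–Ramanujan statistics numerically, here is a minimal sketch (not part of the paper) that tabulates the number of distinct prime factors by a sieve and compares its empirical mean and variance with $\log\log N$. The function names and the cutoff $N = 10^5$ are arbitrary choices of mine; convergence is very slow, so only rough agreement should be expected.

```python
import math

def omega_sieve(limit):
    """omega[n] = number of distinct primes dividing n, for 0 <= n <= limit."""
    omega = [0] * (limit + 1)
    for p in range(2, limit + 1):
        if omega[p] == 0:  # no smaller prime divides p, so p is prime
            for m in range(p, limit + 1, p):
                omega[m] += 1
    return omega

def mean_and_variance(values):
    """Empirical mean and (population) variance of a list of numbers."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, variance

if __name__ == "__main__":
    N = 10 ** 5
    omega = omega_sieve(N)
    mean, variance = mean_and_variance(omega[2:])
    print("mean:", mean, "variance:", variance, "log log N:", math.log(math.log(N)))
```

The second moment computed here is the quantity that Turán's argument controls; the result described above requires the analogous computation with Maynard sieve weights, which this sketch does not attempt.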

Our second result, which answers a separate question of Erdős, establishes that the quantity

is irrational; this had recently been established by Pratt under the assumption of the prime tuples conjecture, but we are able to establish this result unconditionally. The binary expansion of this number is of course closely related to the distribution of , but in view of the Hardy–Ramanujan law, the digit of this number is influenced by about nearby values of , which is too many correlations for current technology to handle. However, it is possible to do some “Gowers norm” type calculations to decouple things to the point where pairwise correlation information is sufficient. To see this, suppose for contradiction that this number was a rational , thus Multiplying by , we obtain some relations between shifts : Using the additive nature of , one then also gets similar relations on arithmetic progressions, for many and : Taking alternating sums of this sort of identity for various and (in analogy to how averages involving arithmetic progressions can be related to Gowers norm-type expressions over cubes), one can eventually eliminate the contribution of small , and arrive at an identity of the form for many , where is a parameter (we eventually take ) and are various shifts that we will not write out explicitly here. This looks like quite a messy expression; however, one can adapt proofs of the Erdős–Kac law and show that, as long as one ignores the contribution of really large prime factors (of order , say) to the , this sort of sum behaves like a gaussian, and in particular once one can show a suitable local limit theorem, one can contradict (1). The contribution of the large prime factors does cause a problem though, as a naive application of the triangle inequality bounds this contribution by , which is an error that overwhelms the information provided by (1). To resolve this we have to adapt the pairwise correlation estimates of Pilatte mentioned earlier to demonstrate that these contributions are in fact . Here it is important that the error estimates of Pilatte are quite strong (of order ); previous correlation estimates of this type (such as those used in this earlier paper with Joni) turn out to be too weak for this argument to close.

Our final result concerns the asymptotic behavior of the density

(we also address similar questions for and ). Heuristic arguments led Erdős, Pomerance, and Sárközy to conjecture that this quantity was asymptotically . They were able to establish an upper bound of , while Hildebrand obtained a lower bound of . Here, we obtain the asymptotic for almost all (the limitation here is the standard one, which is that the current technology on pairwise correlation estimates either requires logarithmic averaging, or is restricted to almost all scales rather than all scales). Roughly speaking, the idea is to use the circle method to rewrite the above density in terms of expressions for various frequencies , use the estimates of Pilatte to handle the minor arc , and convert the major arc contribution back into physical space (in which and are now permitted to differ by a large amount) and use more traditional sieve theoretic methods to estimate the result.

Growth rates of sequences governed by the squarefree properties of its translates

Wouter van Doorn and I have uploaded to the arXiv our paper “Growth rates of sequences governed by the squarefree properties of its translates“. In this paper we answer a number of questions of Erdős (Problem 1102 and Problem 1103 on the Erdős problem web site) regarding how quickly a sequence of increasing natural numbers can grow if one constrains its translates to interact with the set of squarefree numbers in various ways. For instance, Erdős defined a sequence to have “Property ” if each of its translates only intersected in finitely many points. Erdős believed this to be quite a restrictive condition on , writing “Probably a sequence having property P must increase fairly fast, but I have no results in this direction.”. Perhaps surprisingly, we show that while these sequences must be of density zero, they can in fact grow arbitrarily slowly in the sense that one can have for all sufficiently large and any specified function that tends to infinity as . For instance, one can find a sequence that grows like . The density zero claim can be proven by a version of the Maier matrix method, and also follows from known moment estimates on the gaps between squarefree numbers; the latter claim is proven by a greedy construction in which one slowly imposes more and more congruence conditions on the sequence to ensure that various translates of the sequence stop being squarefree after a certain point.

Erdős also defined a somewhat complementary property , which asserts that for infinitely many , all the elements of for are square-free. Since the squarefree numbers themselves have density , it is easy to see that a sequence with property must have (upper) density at most (because it must be “admissible” in the sense of avoiding one residue class modulo for each ). Erdős observed that any sufficiently rapidly growing (admissible) sequence would obey property but beyond that, Erdős writes “I have no precise information about the rate of increase a sequence having property Q must have.”. Our results in this direction may also be surprising: we show that there exist sequences with property with density exactly (or equivalently, ). This requires a recursive sieve construction, in which one starts with an initial scale and finds a much larger number such that is squarefree for most of the squarefree numbers (and all of the squarefree numbers ). We quantify Erdős’s remark by showing that an (admissible) sequence will necessarily obey property once it grows significantly faster than , but need not obey this property if it only grows like . This is achieved through further application of sieve methods.

A third property studied by Erdős is the property of having squarefree sums, so that is squarefree for all . Erdős writes, “In fact one can find a sequence which grows exponentially. Must such a sequence really increase so fast? I do not expect that there is such a sequence of polynomial growth.” Here our results are relatively weak: we can construct such a sequence that grows like , but do not know if this is optimal; the best lower bound we can produce on the growth, coming from the large sieve, is . (Somewhat annoyingly, the precise form of the large sieve inequality we needed was not in the literature, so we have an appendix supplying it.) We suspect that further progress on this problem requires advances in inverse sieve theory.

A weaker property than squarefree sums (but stronger than property ), referred to by Erdős as property , asserts that there are infinitely many such that all elements of (not just the small ones) are square-free. Here, the situation is close to, but not quite the same, as that for property ; we show that sequences with property must have upper density strictly less than , but can have density arbitrarily close to this value.

Finally, we looked at a further question of Erdős on the size of an admissible set . Because the squarefree numbers are admissible, the maximum number of elements of an admissible set up to (OEIS A083544) is at least the number of squarefree elements up to (A013928). It was observed by Ruzsa that the former sequence is greater than the latter for infinitely many . Erdős asked, “Probably this holds for all large x. It would be of some interest to estimate A(x) as accurately as possible.”

We are able to show

with the upper bound coming from the large sieve and the lower bound from a probabilistic construction. In contrast, a classical result of Walfisz shows that Together, this implies that Erdős’s conjecture holds for all sufficiently large . Numerically, it appears that in fact this conjecture holds for all :

However, we do not currently have enough numerical data for the sequence to completely confirm the conjecture in all cases. This could potentially be a crowdsourced project (similar to the Erdős-Guy-Selfridge project reported on in this previous blog post).
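The squarefree side of this comparison is easy to tabulate. Here is a minimal sieve sketch for counting squarefree numbers up to a cutoff, with the classical density $6/\pi^2$ printed alongside for reference; the function names are illustrative, and computing the maximum size of an admissible set (the hard part of the comparison) is not attempted here.

```python
import math

def squarefree_flags(limit):
    """flags[n] is True exactly when n >= 1 is squarefree, for n <= limit."""
    flags = [True] * (limit + 1)
    flags[0] = False
    d = 2
    while d * d <= limit:
        # Strike out every multiple of the square d*d.
        for m in range(d * d, limit + 1, d * d):
            flags[m] = False
        d += 1
    return flags

def squarefree_count(limit):
    """Number of squarefree integers n with 1 <= n <= limit."""
    return sum(squarefree_flags(limit))

if __name__ == "__main__":
    for x in (10, 100, 10 ** 4, 10 ** 6):
        q = squarefree_count(x)
        print(x, q, q / x, 6 / math.pi ** 2)
```

For example, there are 61 squarefree numbers up to 100. Note that conventions differ on whether such counts include the endpoint, so an offset of one may be needed when comparing against a tabulated sequence.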

Call for industry sponsors for IPAM’s RIPS program

Over the last 25 years, the Institute for Pure and Applied Mathematics (IPAM) at UCLA (where I am now director of special projects) has run the popular Research in Industrial Projects for Students (RIPS) program every summer, in which industry sponsors with research projects are matched with talented undergraduates and a postdoctoral mentor to work at IPAM on the sponsored project for nine weeks. IPAM is now putting out a call for industry sponsors who can contribute a suitable research project for the Summer of 2026, as well as funding to cover the costs of the research; details are available here.

(Student applications for this program will open at a later date, once the list of projects is finalized.)

Climbing the cosmic distance ladder: another sample chapter

Five years ago, I announced a popular science book project with Tanya Klowden on the cosmic distance ladder, in which we released a sample draft chapter of the book, covering the “fourth rung” of the ladder, which for us meant the distances to the planets. In the intervening time, a number of unexpected events have slowed down this project significantly; but I am happy to announce that we have completed a second draft chapter, this time on the “seventh rung” of measuring distances across the Milky Way, which required the maturation of the technologies of photography and spectroscopy, as well as the dawn of the era of “big data” in the early twentieth century, as exemplified for instance by the “Harvard computers”.

We welcome feedback of course, and are continuing to work to complete the book despite the various delays. In the meantime, you can check out our Instagram account for the project, or the pair of videos that Grant Sanderson (3Blue1Brown) produced with us on this topic, which I previously blogged about here.

Thanks to Clio Cresswell, Riley Tao, and Noah Klowden for comments and corrections.

Sum-difference exponents for boundedly many slopes, and rational complexity

I have uploaded to the arXiv my paper “Sum-difference exponents for boundedly many slopes, and rational complexity”. This is the second spinoff of my previous project with Bogdan Georgiev, Javier Gómez–Serrano, and Adam Zsolt Wagner that I recently posted about. One of the many problems we experimented on with the AlphaEvolve tool was that of computing sum-difference constants. While AlphaEvolve did modestly improve one of the known lower bounds on sum-difference constants, it also revealed an asymptotic behavior of these constants that had not been previously observed, which I then established rigorously in this paper.

In the original formulation of the sum-difference problem, one is given a finite subset of with some control on projections, such as

and one then asks to obtain upper bounds on the quantity

This is related to Kakeya sets because if one joins a line segment between and for every , one gets a family of line segments whose set of directions has cardinality (1), but whose slices at heights have cardinality at most .

Because is clearly determined by and , one can trivially get an upper bound of on (1). In 1999, Bourgain utilized what was then the very recent “Balog–Szemerédi–Gowers lemma” to improve this bound to , which gave a new lower bound of on the (Minkowski) dimension of Kakeya sets in , which improved upon the previous bounds of Tom Wolff in high dimensions. (A side note: Bourgain challenged Tom to also obtain a result of this form, but when they compared notes, Tom obtained the slightly weaker bound of , which gave Jean great satisfaction.) Currently, the best upper bound known for this quantity is .
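To make the combinatorial setup concrete, here is a minimal sketch in Python that tabulates projection sizes for a finite set of pairs. It assumes (the displayed formulas are not reproduced here, so this is an assumption suggested by the name "sum-difference") that the controlled projections are the first coordinate, the second coordinate, and the sum, and that the quantity to be bounded is the number of distinct differences. The function name `projection_sizes` is hypothetical.

```python
from itertools import product


def projection_sizes(E):
    """Count distinct values of a, b, a+b and a-b over the pairs (a, b) in E.

    Assumption: these are the projections relevant to the sum-difference
    problem; the exact normalization in the post may differ.
    """
    E = set(E)
    return {
        "a": len({a for a, b in E}),
        "b": len({b for a, b in E}),
        "a+b": len({a + b for a, b in E}),
        "a-b": len({a - b for a, b in E}),
    }


# Toy example: the full grid {0, ..., n-1}^2.
n = 5
grid = set(product(range(n), repeat=2))
sizes = projection_sizes(grid)

# The difference a-b is determined by a and b, which gives the trivial bound.
assert sizes["a-b"] <= sizes["a"] * sizes["b"]
print(sizes)
```

For the grid, sums and differences both take 2n-1 values, so nothing interesting happens; the problem is about sets where the first three counts are small while the fourth is as large as possible.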

One can get better bounds by adding more projections. For instance, if one also assumes

then one can improve the upper bound for (1) to . The arithmetic Kakeya conjecture asserts that, by adding enough projections, one can get the exponent arbitrarily close to . If one could achieve this, this would imply the Kakeya conjecture in all dimensions. Unfortunately, even with arbitrarily many projections, the best exponent we can reach asymptotically is .

It was observed by Ruzsa that all of these questions can be equivalently formulated in terms of Shannon entropy. For instance, the upper bound of (1) turns out to be equivalent to the entropy inequality

holding for all discrete random variables (not necessarily independent) taking values in . In the language of this paper, we write this as

Similarly we have

As part of the AlphaEvolve experiments, we directed this tool to obtain lower bounds for for various rational numbers , defined as the best constant in the inequality

We did not figure out a way for AlphaEvolve to efficiently establish upper bounds on these quantities, so the bounds provided by AlphaEvolve were of unknown accuracy. Nevertheless, they were sufficient to give a strong indication that these constants decayed logarithmically to as :

The first main result of this paper is to confirm that this is indeed the case, in that

whenever is in lowest terms and not equal to , where are absolute constants. The lower bound was obtained by observing the shape of the examples produced by AlphaEvolve, which resembled a discrete Gaussian on a certain lattice determined by . The upper bound was established by an application of the “entropic Plünnecke–Ruzsa calculus”, relying particularly on the entropic Ruzsa triangle inequality, the entropic Balog–Szemerédi–Gowers lemma, as well as an entropy form of an inequality of Bukh.

The arguments also apply to settings where there are more projections under control than just the projections. If one also controls projections for various rationals and denotes the set of slopes of the projections under control, then it turns out that the associated sum-difference constant still decays to , but now the key parameter is not the height of , but rather what I call the rational complexity of with respect to , defined as the smallest integer for which one can write as a ratio where are integer-coefficient polynomials of degree at most and coefficients at most . Specifically, decays to at a polynomial rate in , although I was not able to pin down the exponent of this decay exactly. The concept of rational complexity may seem somewhat artificial, but it roughly speaking measures how difficult it is to use the entropic Plünnecke–Ruzsa calculus to pass from control of , and to control of .

While this work does not make noticeable advances towards the arithmetic Kakeya conjecture (we only consider regimes where the sum-difference constant is close to , rather than close to ), it does highlight the fact that these constants are extremely arithmetic in nature, in that the influence of projections on is highly dependent on how efficiently one can represent as a rational combination of the .
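To illustrate how an explicit example certifies a lower bound in the entropic formulation, here is a minimal Python sketch. It assumes, purely for illustration, that the constant for a slope r is normalized as the best C in an inequality of the shape H(X + rY) ≤ C · max(H(X), H(Y), H(X+Y)); the precise normalization and sign convention in the paper may differ. The helper names `entropy`, `pushforward` and `entropy_ratio` are hypothetical. Under this assumption, the ratio computed for any single joint distribution is a lower bound on C.

```python
import math
from collections import defaultdict
from fractions import Fraction


def entropy(pmf):
    """Shannon entropy (in bits) of a dict mapping outcomes to probabilities."""
    return -sum(p * math.log2(p) for p in pmf.values() if p > 0)


def pushforward(joint, f):
    """Distribution of f(X, Y) when (X, Y) has the joint pmf `joint`."""
    out = defaultdict(float)
    for (x, y), p in joint.items():
        out[f(x, y)] += p
    return dict(out)


def entropy_ratio(joint, r):
    """H(X + r*Y) / max(H(X), H(Y), H(X+Y)); an assumed normalization."""
    numerator = entropy(pushforward(joint, lambda x, y: x + r * y))
    denominator = max(
        entropy(pushforward(joint, lambda x, y: x)),
        entropy(pushforward(joint, lambda x, y: y)),
        entropy(pushforward(joint, lambda x, y: x + y)),
    )
    if denominator == 0:
        raise ValueError("all controlled projections are deterministic")
    return numerator / denominator


# Toy joint distribution: (X, Y) uniform on {0, 1}^2 (X and Y need not be
# independent in general; here they happen to be).
joint = {(x, y): 0.25 for x in (0, 1) for y in (0, 1)}
ratio = entropy_ratio(joint, Fraction(-1))  # slope -1 corresponds to X - Y
print(ratio)
```

This toy distribution only gives the trivial ratio; the point of the search described above is to find distributions for which the ratio is as large as possible.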

New Nikodym set constructions over finite fields

I have uploaded to the arXiv my paper “New Nikodym set constructions over finite fields”. This is a spinoff of my previous project with Bogdan Georgiev, Javier Gómez–Serrano, and Adam Zsolt Wagner that I recently posted about. In that project we experimented with using AlphaEvolve (and other tools, such as DeepThink and AlphaProof) to explore various mathematical problems which were connected somehow to an optimization problem. For one of these (the finite field Nikodym set problem), these experiments led (by a somewhat convoluted process) to an improved asymptotic construction of such sets, the details of which are written up (by myself rather than by AI tools) in this paper.

Let be a finite field of some order (which must be a prime or a power of a prime), and let be a fixed dimension. A Nikodym set in is a subset of with the property that for every point , there exists a line passing through such that all points of other than lie in . Such sets are close cousins of Kakeya sets (which contain a line in every direction); indeed, roughly speaking, applying a random projective transformation to a Nikodym set will yield (most of) a Kakeya set. As a consequence, any lower bound on Kakeya sets implies a similar bound on Nikodym sets; in particular, one has a lower bound

on the size of a Nikodym set , coming from a similar bound on Kakeya sets due to Bukh and Chao using the polynomial method.

For Kakeya sets, Bukh and Chao showed this bound to be sharp up to the lower order error ; but for Nikodym sets it is conjectured that in fact such sets should asymptotically have full density, in the sense that

This is known in two dimensions thanks to work by Szönyi et al. on blocking sets, and was also established in bounded torsion cases (and in particular for even ) by Guo, Kopparty, and Sudan by combining the polynomial method with the theory of linear codes. But in other cases this conjecture remains open in three and higher dimensions.

In our experiments we focused on the opposite problem of constructing Nikodym sets of size as small as possible. In the plane , constructions of size when is a perfect square were given by Blokhuis et al., again using the theory of blocking sets; by taking Cartesian products of such sets, one can also make similar constructions in higher dimensions, again assuming is a perfect square. Apart from this, though, there are few such constructions in the literature.
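The Nikodym property defined above can be checked by brute force for very small fields. The following Python sketch does this for a prime field (an assumption made so that arithmetic is just reduction mod p); the function names `directions` and `is_nikodym` are hypothetical, and the enumeration is exponential in the dimension, so it is only meant for toy cases.

```python
from itertools import product


def directions(p, d):
    """One representative per direction in F_p^d (first nonzero coordinate is 1)."""
    reps = []
    for v in product(range(p), repeat=d):
        nonzero = [c for c in v if c != 0]
        if nonzero and nonzero[0] == 1:
            reps.append(v)
    return reps


def is_nikodym(N, p, d):
    """Check whether N is a Nikodym set in F_p^d, for p prime.

    For every point x we look for a direction v such that the points
    x + t*v with t = 1, ..., p-1 (the line through x minus x itself) lie in N.
    """
    N = set(N)
    dirs = directions(p, d)
    for x in product(range(p), repeat=d):
        has_good_line = any(
            all(
                tuple((xi + t * vi) % p for xi, vi in zip(x, v)) in N
                for t in range(1, p)
            )
            for v in dirs
        )
        if not has_good_line:
            return False
    return True


p, d = 3, 2
plane = set(product(range(p), repeat=d))
minus_one_line = {pt for pt in plane if pt[0] != 0}       # remove the line x = 0
minus_two_lines = {pt for pt in plane if pt[0] == 2}      # remove x = 0 and x = 1

print(is_nikodym(plane, p, d))            # the whole plane
print(is_nikodym(minus_one_line, p, d))   # one line removed
print(is_nikodym(minus_two_lines, p, d))  # two parallel lines removed
```

Removing a single line keeps the Nikodym property (points on the removed line use any other line through them, and points off it use the parallel line), while removing two parallel lines destroys it.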

We set AlphaEvolve to try to optimize the three dimensional problem with a variable field size (which we took to be prime for simplicity), with the intent to get this tool to come up with a construction that worked asymptotically for large , rather than just for any fixed value of . After some rounds of evolution, it arrived at a construction which empirically had size about . Inspecting the code, it turned out that AlphaEvolve had constructed a Nikodym set by (mostly) removing eight low-degree algebraic surfaces (all of the form for various ). We used the tool DeepThink to confirm the Nikodym property and to verify the construction, and then asked it to generalize the method. By removing many more than eight surfaces, and using some heuristic arguments based on the Chebotarev density theorem, DeepThink claimed a construction of size formed by removing several higher degree surfaces, but it acknowledged that the arguments were non-rigorous.

The arguments can be sketched here as follows. Let be a random surface of degree , and let be a point in which does not lie in . A random line through then meets in a number of points, which is basically the set of zeroes in of a random polynomial of degree . The (function field analogue of the) Chebotarev density theorem predicts that the probability that this polynomial has no roots in is about , where

is the proportion of permutations on elements that are derangements (no fixed points). So, if one removes random surfaces of degrees , the probability that a random line avoids all of these surfaces is about . If this product is significantly greater than , then the law of large numbers (and concentration of measure) predicts (with high probability) that out of the lines through , at least one will avoid the removed surfaces, thus giving (most of) a Nikodym set. The Lang-Weil estimate predicts that each surface has cardinality about , so this should give a Nikodym set of size about .

DeepThink took the degrees to be large, so that the derangement probabilities were close to . This led it to predict that could be taken to be as large as , leading to the claimed bound (2). However, on inspecting this argument we realized that these moderately high degree surfaces were effectively acting as random sets, so one could dramatically simplify DeepThink’s argument by simply taking to be a completely random set of the desired cardinality (2), in which case the verification of the Nikodym set property (with positive probability) could be established by a standard Chernoff bound-type argument (actually, I ended up using Bennett’s inequality rather than Chernoff’s inequality, but this is a minor technical detail).
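The derangement proportions entering this heuristic are easy to tabulate. Here is a small Python sketch that computes them exactly via the standard inclusion-exclusion formula, cross-checks them by brute force for small degree, and forms the product that heuristically models the probability that a random line avoids all the removed surfaces. The function names are hypothetical, and the product is only the heuristic prediction described above, not a rigorous probability.

```python
from fractions import Fraction
from itertools import permutations
from math import factorial, prod


def derangement_proportion(d):
    """Exact proportion of permutations of d elements with no fixed point."""
    return sum(Fraction((-1) ** k, factorial(k)) for k in range(d + 1))


def derangement_proportion_bruteforce(d):
    """Same quantity by direct enumeration (only feasible for small d)."""
    count = sum(
        1 for perm in permutations(range(d))
        if all(perm[i] != i for i in range(d))
    )
    return Fraction(count, factorial(d))


def heuristic_avoid_probability(degrees):
    """Heuristic probability that a random line misses surfaces of these degrees."""
    return prod((derangement_proportion(d) for d in degrees), start=Fraction(1))


for d in range(1, 8):
    print(d, derangement_proportion(d), float(derangement_proportion(d)))
```

The printed values alternate above and below their limit as the degree grows, with the largest value occurring at degree two.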

On the other hand, the derangement probabilities oscillate around , and in fact are as large as when . This suggested that one could do better than the purely random construction if one only removed quadratic surfaces instead of higher degree surfaces, and heuristically predicted the improvement

However, our experiments with both AlphaEvolve and DeepThink to try to make this idea work either empirically, heuristically, or rigorously were all unsuccessful! Eventually DeepThink discovered the problem: random quadratic polynomials often had two or zero roots (depending on whether the discriminant was a non-zero quadratic residue, or a nonresidue), but would only very rarely have just one root (the discriminant would have to vanish). As a consequence, if happened to lie on one of the removed quadratic surfaces , it was extremely likely that most lines through would intersect in a further point; only the small minority of lines that were tangent to and would avoid this. None of the AI tools we tried were able to overcome this obstacle.
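The root-count dichotomy behind this obstruction can be seen directly by enumeration. The following Python sketch (with the hypothetical function name `root_count_distribution`, and assuming an odd prime field) counts, over all genuinely quadratic polynomials a x² + b x + c mod p, how many have zero, one, or two roots.

```python
from collections import Counter


def root_count_distribution(p):
    """Tally quadratics a*x^2 + b*x + c over F_p (a != 0) by number of roots in F_p.

    Assumes p is an odd prime.
    """
    counts = Counter()
    for a in range(1, p):
        for b in range(p):
            for c in range(p):
                roots = sum(1 for x in range(p) if (a * x * x + b * x + c) % p == 0)
                counts[roots] += 1
    return counts


p = 7
dist = root_count_distribution(p)
total = sum(dist.values())
for k in (0, 1, 2):
    print(k, dist[k], dist[k] / total)
```

Exactly one root occurs only when the discriminant vanishes, which happens for a proportion 1/p of the polynomials; the remaining ones split evenly between zero and two roots.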

However, I realized that one could repair the construction by adding back a small random portion of the removed quadratic surfaces, to allow for a non-zero number of lines through to stay inside the putative Nikodym set even when was in one of the surfaces , and the line was not tangent to . Pursuing this idea, and performing various standard probabilistic calculations and projective changes of variable, the problem essentially reduced to the following: given random quadratic polynomials in the plane , is it true that these polynomials simultaneously take quadratic residue values for of the points in that plane? Heuristically this should be true even for close to . However, it proved difficult to accurately control this simultaneous quadratic residue event; standard algebraic geometry tools such as the Weil conjectures seemed to require some vanishing of étale cohomology groups in order to obtain adequate error terms, and this was not something I was eager to try to work out. However, by exploiting projective symmetry (and the -transitive nature of the projective linear group), I could get satisfactory control of such intersections as long as was a little bit larger than rather than . This gave an intermediate construction of size

which still beat the purely random construction, but fell short of heuristic predictions. This argument (generalized to higher dimensions) is what is contained in the paper. I pose as an open question the problem of locating a construction with the improved bound (3) (perhaps by some modification of the strategy of removing quadratic varieties).

We also looked at the two-dimensional case to see how well AlphaEvolve could recover known results, in the case that was a perfect square. It was able to come up with a construction that was slightly worse than the best known construction, in which one removed a large number of parabolas from the plane; after manually optimizing the construction we were able to recover the known bound (1). This final construction is somewhat similar to existing constructions (it has a strong resemblance to a standard construction formed by taking the complement of a Hermitian unital), but is still technically a new construction, so we have also added it to this paper.