Mathematical blogs

Theorem Update

A corrected version of Theorem no. 29, Gauss's Law of Quadratic Reciprocity, has been posted.

In stating the Law, its dependence on the second variable q was omitted (thanks to John Drost for pointing this out to me).
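For reference, the textbook form of the Law for distinct odd primes p and q is (p/q)(q/p) = (-1)^(((p-1)/2)·((q-1)/2)), so the sign on the right depends on both primes. The Python sketch below is not taken from the theorem page. It checks the statement numerically, computing the Legendre symbol (a/p) with Euler's criterion, a^((p-1)/2) ≡ (a/p) (mod p). The function names `legendre` and `reciprocity_holds` are illustrative only.

```python
def legendre(a: int, p: int) -> int:
    """Legendre symbol (a/p) for an odd prime p, computed via Euler's criterion."""
    r = pow(a, (p - 1) // 2, p)  # r is 0, 1 or p - 1
    return -1 if r == p - 1 else r


def reciprocity_holds(p: int, q: int) -> bool:
    """Check (p/q)(q/p) == (-1)^(((p-1)/2)((q-1)/2)) for distinct odd primes p, q."""
    lhs = legendre(p, q) * legendre(q, p)
    rhs = (-1) ** (((p - 1) // 2) * ((q - 1) // 2))
    return lhs == rhs


odd_primes = [3, 5, 7, 11, 13, 17, 19, 23, 29, 31]
print(all(reciprocity_holds(p, q) for p in odd_primes for q in odd_primes if p != q))
```

Note that the exponent on the right is even as soon as either prime is congruent to 1 mod 4, which is exactly where the dependence on q enters.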

Categories: Mathematical blogs

New Theorem

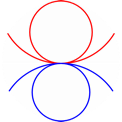

Theorem of the Day has a 'new acquisition': Wallis's Theorem

News from Theorem of the Day

Theorem of the Day now has an FAQ page.

New Theorem

Theorem of the Day has a 'new acquisition': Vaughan Pratt's Theorem

New Theorem

Theorem of the Day has a 'new acquisition': Strassen's Matrix Theorem

New Theorem

Theorem of the Day has a 'new acquisition': a Theorem on Rectangular Tensegrities

News from Theorem of the Day

Theorem of the Day has an embryonic international listing of public events on mathematics, to which everyone is welcome to submit items.

New Theorem

Theorem of the Day has a 'new acquisition': the Piff-Welsh Theorem

New Theorem

Theorem of the Day has two 'new acquisitions': Sylvester's Law of Inertia and the Robinson-Schensted-Knuth Correspondence

New Theorem

Theorem of the Day has a 'new acquisition': Lieb's Square Ice Theorem

New Theorem

Theorem of the Day has a 'new acquisition': The Contraction Mapping Theorem

Theorem Update

Theorem no. 70, on vertex-degree colourability of graphs, has been updated to reflect the striking reduction in the upper bound recently proved by Kalkowski, Karoński and Pfender.

New Theorem

Theorem of the Day has a 'new acquisition': The Panarboreal Formula

New Theorem

Theorem of the Day has a 'new acquisition': The Sophomore's Dream

New Theorem

Theorem of the Day has a 'new acquisition': A Theorem of Schur on Real-Rootedness

New Theorem

Theorem of the Day has a 'new acquisition': Euclid's Prism

New Theorem

Theorem of the Day has a 'new acquisition': Woodall's Hopping Lemma

New Theorem

Theorem of the Day has a 'new acquisition': The Short Gaps Theorem

Theorem Update

A new version of Theorem no. 90, Cardano's Cubic Formula, has been posted.

The new version has a much more explicit example of how to use the formula to solve cubic equations.
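The worked example in the posted theorem is not reproduced here. As a rough illustration of the same kind of procedure, the Python sketch below reduces a·x³ + b·x² + c·x + d = 0 to a depressed cubic t³ + p·t + q = 0 via the substitution x = t - b/(3a) and then applies Cardano's formula. It is an assumption-laden sketch: it handles only the case where the discriminant (q/2)² + (p/3)³ is non-negative, so that real cube roots suffice, and the names `real_cbrt` and `cardano_real_root` are hypothetical.

```python
import math


def real_cbrt(x: float) -> float:
    """Real cube root, valid for negative arguments too."""
    return math.copysign(abs(x) ** (1.0 / 3.0), x)


def cardano_real_root(a: float, b: float, c: float, d: float) -> float:
    """Return one real root of a*x^3 + b*x^2 + c*x + d = 0 via Cardano's formula.

    Only the case with non-negative discriminant is handled; with three
    distinct real roots (casus irreducibilis) complex cube roots are needed.
    """
    if a == 0:
        raise ValueError("leading coefficient must be non-zero")
    # Substituting x = t - b/(3a) gives the depressed cubic t^3 + p*t + q = 0.
    p = (3 * a * c - b * b) / (3 * a * a)
    q = (2 * b ** 3 - 9 * a * b * c + 27 * a * a * d) / (27 * a ** 3)
    disc = (q / 2) ** 2 + (p / 3) ** 3
    if disc < 0:
        raise ValueError("three distinct real roots: complex cube roots required")
    s = math.sqrt(disc)
    # Cardano: t = cbrt(-q/2 + sqrt(disc)) + cbrt(-q/2 - sqrt(disc))
    t = real_cbrt(-q / 2 + s) + real_cbrt(-q / 2 - s)
    return t - b / (3 * a)


# Example: x^3 - 6x - 9 = 0, where -q/2 = 4.5 and sqrt(disc) = 3.5,
# so t = cbrt(8) + cbrt(1). The exact root is 3; the printed float may
# differ from 3.0 in the last digit.
print(cardano_real_root(1, 0, -6, -9))
```

Raising an error in the three-real-roots case keeps the sketch honest: the formula still applies there, but only with complex arithmetic, which this sketch deliberately avoids.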

New Theorem

Theorem of the Day has a 'new acquisition': de Moivre's Theorem
